Backward, forward and stepwise automated subset selection algorithms (PDF)

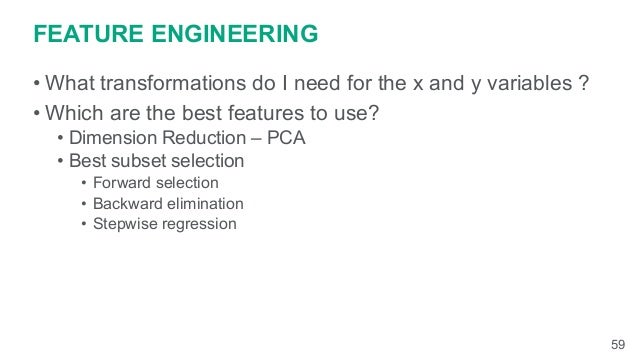

7.3 Feature selection algorithms: Next, forward selection finds the best subset consisting of two components: X(1) and one other feature from the remaining M − 1 input attributes.
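
The greedy step above can be sketched with NumPy. This is a minimal illustration, not the book's code. It assumes ordinary least squares with an intercept, and it uses the residual sum of squares (RSS) to decide which subset is "best". The helper names `rss` and `forward_selection` and the simulated data are hypothetical.

```python
import numpy as np

def rss(X, y, cols):
    """Residual sum of squares of an OLS fit of y on an intercept plus X[:, cols]."""
    n = len(y)
    A = np.column_stack([np.ones(n)] + [X[:, j] for j in cols])
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ beta
    return float(resid @ resid)

def forward_selection(X, y, k):
    """Grow the subset one feature at a time, each time adding the feature
    that gives the lowest RSS together with the features already chosen."""
    selected, remaining = [], list(range(X.shape[1]))
    while remaining and len(selected) < k:
        best = min(remaining, key=lambda j: rss(X, y, selected + [j]))
        selected.append(best)
        remaining.remove(best)
    return selected

# Hypothetical data: only features 2 and 4 carry signal.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 6))
y = 3.0 * X[:, 2] - 2.0 * X[:, 4] + rng.normal(scale=0.5, size=200)
print(forward_selection(X, y, 2))
```

With k = 1 the function picks X(1) alone. With k = 2 it carries out the two-component step described in the text.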

method refers to backward-forward stepwise selection as implemented in Scott Emerson’s S language function ‘coxrgrss’ with the default P-values to …

Automatic variable selection procedures can be a valuable tool in data analysis, particularly in the early stages of building a model. The choice between Stepwise and Best Subsets is largely between the convenience of a single model versus the additional information that Best Subsets provides. Of course, you can always just run both, like I did.

Backward, forward and stepwise automated subset selection algorithms: Frequency of obtaining authentic and noise variables, British Journal of Mathematical and Statistical Psychology.

The stepwise method is a modification of the forward-selection technique and differs in that variables already in the model do not necessarily stay there. As in the forward-selection method, variables are added one by one to the model, and the F statistic for a variable to be added must be significant at the SLENTRY= level.

Stepwise regression is a combination of the forward and backward selection techniques. It was very popular at one time, but the Multivariate Variable Selection procedure described in a later chapter will always do at least as well and

Performance of using multiple stepwise algorithms for variable selection: The purpose of this study is to determine whether using multiple stepwise variable selection (SVS) algorithms in tandem (stepwise agreement) is a valid variable selection procedure.

Background: Automatic stepwise subset selection methods in linear regression often perform poorly, both in terms of variable selection and estimation of coefficients and standard errors, especially when number of independent variables is large and multicollinearity is present.

Backward, forward and stepwise automated subset selection algorithms: frequency of obtaining authentic and noise variables.

(1978). Computation of a best subset in multivariate analysis.

use a search algorithm (e.g., Forward selection, Backward elimination and Stepwise regression) to find the best model. The R function step() can be used to perform variable selection.

Unlike forward stepwise selection, backward stepwise selection begins with the full least squares model containing all p predictors. It then iteratively removes the least useful predictor, one at a time.
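
Here is a minimal sketch of backward stepwise selection. It assumes that "least useful" is judged by AIC for a Gaussian linear model, computed up to an additive constant as n·log(RSS/n) + 2·(number of coefficients). p-values, BIC or cross-validation are equally common choices. The function names and data are illustrative only.

```python
import numpy as np

def aic(X, y, cols):
    """Gaussian-model AIC up to a constant: n*log(RSS/n) + 2*(coefficients incl. intercept)."""
    n = len(y)
    A = np.column_stack([np.ones(n)] + [X[:, j] for j in cols])
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ beta
    return n * np.log(float(resid @ resid) / n) + 2 * A.shape[1]

def backward_elimination(X, y):
    current = list(range(X.shape[1]))        # start from the full model with all p predictors
    best_aic = aic(X, y, current)
    while current:
        # Score every model that drops exactly one of the current predictors.
        trials = [(aic(X, y, [c for c in current if c != j]), j) for j in current]
        trial_aic, least_useful = min(trials)
        if trial_aic >= best_aic:             # no single removal improves AIC: stop
            break
        current.remove(least_useful)
        best_aic = trial_aic
    return current

# Hypothetical data: predictors 0 and 3 are real, the other three are noise.
rng = np.random.default_rng(1)
X = rng.normal(size=(150, 5))
y = 2.0 * X[:, 0] + 1.5 * X[:, 3] + rng.normal(size=150)
print(backward_elimination(X, y))
```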

A subset selection problem has two competing objectives: (1) the residuals, y − Xβ, should be close to zero, and (2) the size of the chosen set should be small.
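
One standard way to combine the two objectives is to fix a size budget k and minimize the residual sum of squares over all coefficient vectors with at most k nonzero entries. The symbols k and λ are introduced here for illustration. Alternatively, the two objectives can be traded off with a penalty λ:

```latex
\min_{\beta \in \mathbb{R}^{p}} \; \lVert y - X\beta \rVert_2^2
\quad \text{subject to} \quad \lVert \beta \rVert_0 \le k,
\qquad \lVert \beta \rVert_0 = \#\{\, j : \beta_j \neq 0 \,\},
\\[4pt]
\min_{\beta \in \mathbb{R}^{p}} \; \lVert y - X\beta \rVert_2^2 + \lambda \lVert \beta \rVert_0,
\qquad \lambda \ge 0 .
```

Best subset selection solves the constrained form exactly by search. Forward, backward and stepwise procedures are greedy approximations to it.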

I would like to implement an algorithm for automatic model selection. I am thinking of doing stepwise regression, but anything will do (it has to be based on linear regressions, though).

In particular, the effects of the correlation between predictor variables, the number of candidate predictor variables, the size of the sample, and the level of significance for entry and deletion of variables were studied for three automated subset algorithms: BACKWARD ELIMINATION, FORWARD SELECTION, and STEPWISE. Results indicated that: (1) the degree of correlation between the predictor

stepwise (Stepwise estimation): stepwise performs forward-selection search. The logic for the first step is: 1. Fit a model of y on nothing (meaning a constant).

Model Building: Automated Selection Procedures (STAT 512, Spring 2011). Background reading: KNNL, Sections 9.4–9.6. Topic overview: review of selection criteria; CDI/Physicians case study; “best” subsets algorithms; stepwise regression methods (forward selection, backward elimination, forward stepwise); model validation. Questions in model selection: How many …

Correctly specified, the algorithm should be described as either stepwise forward selection, stepwise backward elimination, or stepwise with forward selection and/or backward elimination; however, the word stepwise itself is also commonly used to refer to any of …

Problems with Stepwise Regression (Phil Ender)

R: Choose a model by AIC in a Stepwise Algorithm

A bi-directional stepwise procedure is a combination of forward selection and backward elimination. As with forward selection, the procedure starts with no variables and adds variables using a pre-specified criterion. The wrinkle is that, at every step, the procedure also considers the statistical consequences of dropping variables that were previously included. So, a variable might be added
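
The add-or-drop logic can be sketched as follows. The sketch assumes AIC is the pre-specified criterion, computed up to a constant. At each step every single addition and every single deletion is scored, and the best move is taken. The search stops when no move lowers AIC. This mirrors the idea behind R's step() with direction = "both", but it is an illustrative re-implementation, not that function. Names and data are hypothetical.

```python
import numpy as np

def aic(X, y, cols):
    """Gaussian-model AIC up to a constant: n*log(RSS/n) + 2*(coefficients incl. intercept)."""
    n = len(y)
    A = np.column_stack([np.ones(n)] + [X[:, j] for j in cols])
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ beta
    return n * np.log(float(resid @ resid) / n) + 2 * A.shape[1]

def stepwise_both(X, y, max_steps=100):
    current = []                                  # start with no variables
    best = aic(X, y, current)
    for _ in range(max_steps):
        moves = []
        for j in range(X.shape[1]):
            if j in current:
                cand = [c for c in current if c != j]   # consequence of dropping j
            else:
                cand = sorted(current + [j])            # consequence of adding j
            moves.append((aic(X, y, cand), cand))
        trial, cand = min(moves, key=lambda m: m[0])
        if trial >= best:                         # no add or drop lowers AIC: stop
            break
        current, best = cand, trial
    return current

rng = np.random.default_rng(5)
X = rng.normal(size=(150, 5))
y = 2.0 * X[:, 0] + 1.5 * X[:, 3] + rng.normal(size=150)
print(stepwise_both(X, y))
```

AIC strictly decreases with every accepted move, so the search cannot cycle. The max_steps guard is only a safety net.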

Derksen S, Keselman HJ: Backward, forward and stepwise automated subset selection algorithms: Frequency of obtaining authentic and noise variables. British Journal of Mathematical and Statistical Psychology 1992, 45 …

From a comparison study with standard methods of variable subset selection by forward selection and backward elimination, GSA is found to perform better. Three data sets are used for distinction purposes. Two of the data sets are relatively small, allowing comparison to the global variable subset obtained by computing all possible variable combinations.

Abstract. The use of automated subset search algorithms is reviewed and issues concerning model selection and selection criteria are discussed. In addition, a Monte Carlo study is reported which presents data regarding the frequency with which authentic and noise variables are selected by automated subset algorithms.
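
The kind of Monte Carlo experiment described above can be imitated in a few lines. The sketch makes these assumptions:

- predictors are independent and standard normal;
- a few authentic predictors have equal coefficients, and the rest are pure noise;
- selection is forward selection with an F-to-enter threshold.

All settings (sample size, coefficient 0.5, threshold 4.0, number of replications) are hypothetical. They are not the study's design or results.

```python
import numpy as np

def rss(X, y, cols):
    """Residual sum of squares of an OLS fit of y on an intercept plus X[:, cols]."""
    n = len(y)
    A = np.column_stack([np.ones(n)] + [X[:, j] for j in cols])
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ beta
    return float(resid @ resid)

def forward_f(X, y, f_in=4.0):
    """Forward selection: add the predictor with the largest partial F until none reaches f_in."""
    n, p = X.shape
    current = []
    while len(current) < p:
        rss_now = rss(X, y, current)
        df = n - (len(current) + 2)      # residual df once one more predictor is added
        scores = {}
        for j in range(p):
            if j not in current:
                rss_new = rss(X, y, current + [j])
                scores[j] = (rss_now - rss_new) / (rss_new / df)
        j_best = max(scores, key=scores.get)
        if scores[j_best] < f_in:
            break
        current.append(j_best)
    return current

def selection_frequency(n_reps=200, n=100, n_auth=3, n_noise=5, seed=0):
    """Fraction of simulated data sets in which each predictor is selected.
    Columns 0..n_auth-1 are authentic; the remaining columns are noise."""
    rng = np.random.default_rng(seed)
    p = n_auth + n_noise
    counts = np.zeros(p)
    for _ in range(n_reps):
        X = rng.normal(size=(n, p))
        y = 0.5 * X[:, :n_auth].sum(axis=1) + rng.normal(size=n)
        for j in forward_f(X, y):
            counts[j] += 1
    return counts / n_reps

freq = selection_frequency()
print("authentic:", freq[:3], "noise:", freq[3:])
```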

by an automated algorithm. The stepwise method involves two approaches, namely, backward elimination and forward selection. Currently, SAS® has several regression procedures capable of performing stepwise regression. Among them are REG, LOGISTIC, GLMSELECT and PHREG. PROC REG handles linear regression models but does not support a CLASS statement. PROC LOGISTIC …

To understand why, it may help you to read my answer here: Algorithms for automatic model selection. As far as advantages go, in the days when searching through all possible combinations of features was too computationally intensive for computers to handle, stepwise selection saved …

Stepwise regression comes in 3 basic varieties: (1) in forward selection, the computer starts with an empty model and adds variables sequentially; (2) in backward selection, the computer starts with a full model (which contains all variables) and prunes them; and (3) in stepwise selection, the computer both adds and removes variables. In practice, these 3 selection algorithms often converge on

For the selection criteria LR and SC, α significantly decreased with the number of predictor variables, but for WD it did not. The overall recommendation is to use LR or SC as the selection criterion with a stopping criterion of 0.20 ≤ α ≤ 0.40, and to use a larger α level when there are fewer variables.

Forward and backward procedures are rarely used anymore, because stepwise selection is considered superior to either. Although stepwise selection is better than forward or backward

presented here, vselect, performs the stepwise selection algorithms forward selection and backward elimination as well as the best subsets leaps-and-bounds algorithm. The output of these algorithms and the partial F test is not very meaningful unless

10.2 Stepwise Procedures: Backward Elimination

This is the simplest of all variable selection procedures and can be easily implemented without special software. In situations where there is a complex hierarchy, backward elimination can be run manually while taking account of what variables are eligible for removal.

1. Start with all the predictors in the model.
2. Remove the predictor with
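
When the model contains interactions, "eligible for removal" is often taken to mean the marginality principle. Under that rule, a term may be dropped only if no higher-order term containing it is still in the model. The helper below is a hypothetical sketch of that bookkeeping, not the source's code. It assumes each term is represented as a tuple of variable names, and it is one reasonable reading of the text.

```python
def eligible_for_removal(terms):
    """Return the terms that no other term in the model contains.
    Each term is a tuple of variable names, e.g. ("a",) or ("a", "b") for a:b."""
    as_sets = [frozenset(t) for t in terms]
    return [t for t, s in zip(terms, as_sets)
            if not any(s < other for other in as_sets)]   # s < other: proper subset

model = [("a",), ("b",), ("c",), ("a", "b")]
print(eligible_for_removal(model))   # [('c',), ('a', 'b')]
```

Once a:b has been removed, a and b become eligible at the next backward step.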

Best subset selection, forward stepwise selection, and the lasso are popular methods for selection and estimation of the parameters in a linear model. The first two are classical methods in statistics,

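Scikit-learn does have a forward selection algorithm, but it is not f_regression. SequentialFeatureSelector (added in version 0.24) adds features one at a time with direction='forward' (or removes them with direction='backward') until n_features_to_select features remain. By contrast, f_regression is a univariate scoring function: it computes an F statistic for each feature from that feature's correlation with the target. Used with SelectKBest, it keeps the K highest-scoring features (K is an input) without refitting the model as features are added.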
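
The difference matters when predictors are correlated. The NumPy sketch below does not use scikit-learn. It assumes the univariate score is F = r²/(1 − r²)·(n − 2), where r is each feature's correlation with the target; as we understand it, this is how f_regression converts the correlation into an F score with its default centering. The sketch compares the top two features by that score with two steps of greedy forward selection. The data and function names are hypothetical.

```python
import numpy as np

def univariate_f(X, y):
    """Score each feature on its own: F = r^2 / (1 - r^2) * (n - 2)."""
    n = len(y)
    Xc, yc = X - X.mean(axis=0), y - y.mean()
    r = (Xc.T @ yc) / (np.linalg.norm(Xc, axis=0) * np.linalg.norm(yc))
    return r**2 / (1 - r**2) * (n - 2)

def rss(X, y, cols):
    """Residual sum of squares of an OLS fit of y on an intercept plus X[:, cols]."""
    n = len(y)
    A = np.column_stack([np.ones(n)] + [X[:, j] for j in cols])
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ beta
    return float(resid @ resid)

def forward_k(X, y, k):
    """Greedy sequential forward selection of k features by RSS."""
    selected = []
    for _ in range(k):
        rest = [j for j in range(X.shape[1]) if j not in selected]
        selected.append(min(rest, key=lambda j: rss(X, y, selected + [j])))
    return selected

rng = np.random.default_rng(0)
n = 500
z = rng.normal(size=n)
X = np.column_stack([
    z + 0.1 * rng.normal(size=n),   # feature 0: noisy copy of z
    z + 0.1 * rng.normal(size=n),   # feature 1: another, nearly redundant copy
    rng.normal(size=n),             # feature 2: weaker but independent signal
])
y = z + 0.5 * X[:, 2] + 0.3 * rng.normal(size=n)

top2_univariate = sorted(np.argsort(univariate_f(X, y))[-2:].tolist())
top2_forward = sorted(forward_k(X, y, 2))
print(top2_univariate, top2_forward)
```

In this constructed example, scoring features one at a time keeps both redundant copies of z. Sequential selection instead swaps the second copy for the independent feature.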

1.1 The variable selection problem: Selecting a subset of variables which can be used as a surrogate for a full set of variables, without major loss of quality, is a problem that arises in numerous contexts. Multiple linear regression has been the classical statistical setting for variable selection and the greedy algorithms of backward elimination, forward selection and stepwise selection have

Although automatic stepwise subset selection often performs poorly, stepwise algorithms remain the dominant method in medical and epidemiological research.

Bootstrap model selection had similar performance for

Forward selection is the opposite of backward elimination. Here, we begin with an empty model and then greedily add terms that have a statistically significant effect.

When there were five true variables and five noise variables, then bootstrap model selection with a threshold of 50% and conventional backward variable elimination resulted in the selection of models that had qualitatively similar probabilities of containing the true model as a subset. The use of bootstrap model selection with a threshold of 75% resulted in the selection of models with lower
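
Bootstrap model selection can be sketched as follows: rerun a selection procedure on bootstrap resamples, then keep the variables whose inclusion frequency reaches a threshold such as 50% or 75%. This sketch assumes AIC-based backward elimination as the inner procedure. It uses hypothetical data with two true variables and three noise variables, and it is not the cited study's exact protocol.

```python
import numpy as np

def aic(X, y, cols):
    """Gaussian-model AIC up to a constant: n*log(RSS/n) + 2*(coefficients incl. intercept)."""
    n = len(y)
    A = np.column_stack([np.ones(n)] + [X[:, j] for j in cols])
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ beta
    return n * np.log(float(resid @ resid) / n) + 2 * A.shape[1]

def backward_elimination(X, y):
    current = list(range(X.shape[1]))
    best_aic = aic(X, y, current)
    while current:
        trials = [(aic(X, y, [c for c in current if c != j]), j) for j in current]
        trial_aic, worst = min(trials)
        if trial_aic >= best_aic:
            break
        current.remove(worst)
        best_aic = trial_aic
    return current

def bootstrap_inclusion(X, y, n_boot=50, seed=0):
    """Fraction of bootstrap resamples in which each variable survives selection."""
    rng = np.random.default_rng(seed)
    n, p = X.shape
    counts = np.zeros(p)
    for _ in range(n_boot):
        idx = rng.integers(0, n, size=n)          # resample rows with replacement
        for j in backward_elimination(X[idx], y[idx]):
            counts[j] += 1
    return counts / n_boot

rng = np.random.default_rng(2)
X = rng.normal(size=(150, 5))
y = 2.0 * X[:, 0] + 1.5 * X[:, 3] + rng.normal(size=150)
freq = bootstrap_inclusion(X, y)
for threshold in (0.5, 0.75):
    print(threshold, [j for j in range(X.shape[1]) if freq[j] >= threshold])
```

A higher threshold can only keep the same variables or fewer, which is the trade-off the 50% and 75% settings above are probing.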

widely used sequential, variable selection algorithms, stepwise and backward elimination. A score is developed to assess the ability of any variable selection method to terminate with the correct model.

Automated model selection procedures: backward elimination, forward selection and stepwise selection, are the three most commonly used variable selection procedures. In backward elimination, variables are eliminated from the full model based on a certain …

an automated algorithm cannot solve all problems for researchers. Researchers can run both stepwise and all-possible-subsets regressions to get the additional

Backward, forward and stepwise automated subset selection algorithms: frequency of obtaining authentic and noise variables. British Journal of Mathematical and Statistical Psychology 45: 265–282. Hurvich, C. M. and C. L. Tsai. 1990.

Stepwise Searching for Feature Variables in High-Dimensional Linear Regression: We investigate the classical stepwise forward and backward search methods for selecting sparse models in the context of linear regression with the number of candidate variables p greater than the number of observations n. In the noiseless case, we give definite upper bounds for the number of forward …

Stepwise regression is an automated tool used in the exploratory stages of model building to identify a useful subset of predictors. At each step, it systematically adds the most significant variable or removes the least significant one.

Heuristic subset selection was pioneered by the stepwise regression algorithm of Efroymson (1960), while exhaustive search was initially

(Footnote 5) It is useful to examine the evolution of the econometric “zeitgeist” through an in-depth comparison

feature selection algorithms that can be applied at scale. The fundamental challenge associated with selecting optimal feature subsets is that the number of candidates of a given

the stepwise-selected model is returned, with up to two additional components. There is an “anova” component corresponding to the steps taken in the search, as well as a “keep” component if the keep= argument was supplied in the call.

In the multiple regression procedure in most statistical software packages, you can choose the stepwise variable selection option and then specify the method as “Forward” or “Backward,” and also specify threshold values for F-to-enter and F-to-remove.
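
Here is a minimal sketch of that option, assuming partial F statistics from ordinary least squares with an intercept. The thresholds 4.0 and 3.9 are illustrative, not any package's defaults. Keeping F-to-remove below F-to-enter stops a variable that has just entered from being removed in the same step. Function names and data are hypothetical.

```python
import numpy as np

def rss(X, y, cols):
    """Residual sum of squares of an OLS fit of y on an intercept plus X[:, cols]."""
    n = len(y)
    A = np.column_stack([np.ones(n)] + [X[:, j] for j in cols])
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ beta
    return float(resid @ resid)

def partial_f(X, y, small, big):
    """Partial F for the single extra predictor in `big` compared with `small`."""
    rss_big = rss(X, y, big)
    df_resid = len(y) - (len(big) + 1)            # +1 for the intercept
    return (rss(X, y, small) - rss_big) / (rss_big / df_resid)

def stepwise_f(X, y, f_in=4.0, f_out=3.9, max_steps=100):
    assert f_out < f_in, "F-to-remove must be below F-to-enter"
    current = []
    for _ in range(max_steps):
        changed = False
        # Entry: add the candidate with the largest F-to-enter if it reaches f_in.
        outside = [j for j in range(X.shape[1]) if j not in current]
        if outside:
            f_enter = {j: partial_f(X, y, current, current + [j]) for j in outside}
            j_in = max(f_enter, key=f_enter.get)
            if f_enter[j_in] >= f_in:
                current.append(j_in)
                changed = True
        # Removal: drop the variable with the smallest F-to-remove if it is below f_out.
        if current:
            f_remove = {j: partial_f(X, y, [c for c in current if c != j], current)
                        for j in current}
            j_out = min(f_remove, key=f_remove.get)
            if f_remove[j_out] < f_out:
                current.remove(j_out)
                changed = True
        if not changed:
            break
    return current

rng = np.random.default_rng(6)
X = rng.normal(size=(150, 5))
y = 2.0 * X[:, 0] + 1.5 * X[:, 3] + rng.normal(size=150)
print(stepwise_f(X, y))
```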

Reviews the use of automated subset search algorithms and describes issues concerning model selection and selection criteria in educational and psychological research.

Selection with (Generalized) Linear Models. Vincent Calcagno (McGill University) and Claire de Mazancourt (McGill University). Abstract: We introduce glmulti, an R package for automated model selection and multi-model inference with glm and related functions. From a list of explanatory variables, the provided function glmulti builds all possible unique models involving these variables and, optionally

selection results in an accuracy of 89.3% with the 1NN method. The selected subset has a representative feature from every model; the 5-feature subset selected contains features from 3 different models.

is some form of automated procedure, such as forward, backward, or stepwise selection. We show that these methods are not to be recommended, and present better alternatives using PROC GLMSELECT and other methods.

two main methods used for selecting variables, forward and backward selection. Backward selection is the most straightforward method and intends to reduce the model from the complete one (i.e. with all the factors considered) to

• Feature selection, also called feature subset selection (FSS) in the literature, will be the subject of the last two lectures – Although FSS can be thought of as a special case of feature extraction (think of a

A selection algorithm would be a great feature to have in GENMOD. Automatic selection methods are controversial in some instances, but sometimes all one needs is a reasonably good model with some of the noise removed.

Stepwise regression and best subsets regression are both automatic tools that help you identify useful predictors during the exploratory stages of model building for linear regression. These two procedures use different methods and present you with different output.
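
Best subsets output can be illustrated with an exhaustive search. For each model size, it reports the subset with the lowest RSS and that subset's R². This brute-force sketch uses hypothetical data. It fits all 2^p − 1 subsets, so it is only practical for small p; packages use smarter searches such as leaps-and-bounds.

```python
from itertools import combinations
import numpy as np

def rss(X, y, cols):
    """Residual sum of squares of an OLS fit of y on an intercept plus X[:, cols]."""
    n = len(y)
    A = np.column_stack([np.ones(n)] + [X[:, j] for j in cols])
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ beta
    return float(resid @ resid)

def best_subsets(X, y):
    """For each size k, the subset with the smallest RSS and its R^2."""
    p = X.shape[1]
    tss = float(((y - y.mean()) ** 2).sum())
    table = {}
    for k in range(1, p + 1):
        cols = list(min(combinations(range(p), k), key=lambda c: rss(X, y, list(c))))
        table[k] = (cols, 1.0 - rss(X, y, cols) / tss)
    return table

rng = np.random.default_rng(3)
X = rng.normal(size=(150, 5))
y = 2.0 * X[:, 0] + 1.5 * X[:, 3] + rng.normal(size=150)
table = best_subsets(X, y)
for k, (cols, r2) in table.items():
    print(k, cols, round(r2, 3))
```

Unlike stepwise selection, which returns a single model, this produces one candidate per size for the analyst to compare.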

Forward stepwise selection begins with a model containing no predictors, and then adds predictors to the model, one-at-a-time, until all of the predictors are in the model.
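
The sequence of models this produces, from the empty model to the full one, can be sketched as below, with one model then picked from the path. Choosing by BIC, computed up to a constant as n·log(RSS/n) + log(n)·(number of coefficients), is an assumption made here for illustration. Cross-validation, Cp, AIC and adjusted R² are common alternatives. Names and data are hypothetical.

```python
import numpy as np

def rss(X, y, cols):
    """Residual sum of squares of an OLS fit of y on an intercept plus X[:, cols]."""
    n = len(y)
    A = np.column_stack([np.ones(n)] + [X[:, j] for j in cols])
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ beta
    return float(resid @ resid)

def forward_path(X, y):
    """Models M0, M1, ..., Mp: each adds the predictor that lowers RSS the most."""
    path, selected = [[]], []
    remaining = list(range(X.shape[1]))
    while remaining:
        best = min(remaining, key=lambda j: rss(X, y, selected + [j]))
        selected = selected + [best]     # new list, so earlier path entries are unchanged
        remaining.remove(best)
        path.append(selected)
    return path

def bic(X, y, cols):
    n = len(y)
    return n * np.log(rss(X, y, cols) / n) + np.log(n) * (len(cols) + 1)

rng = np.random.default_rng(4)
X = rng.normal(size=(150, 5))
y = 2.0 * X[:, 0] + 1.5 * X[:, 3] + rng.normal(size=150)
path = forward_path(X, y)
chosen = min(path, key=lambda cols: bic(X, y, cols))
print(path)
print("chosen by BIC:", chosen)
```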

Algorithms for Subset Selection in Linear Regression. Abhimanyu Das and David Kempe, Department of Computer Science, University of Southern California, Los Angeles, CA 90089. Abstract: We study the problem of selecting a subset of k random variables to …

The article introduces variable selection with stepwise and best subset approaches. Two R functions stepAIC() and bestglm() are well designed for these purposes. The stepAIC() function begins with a full or null model, and methods for stepwise regression can be specified in the direction argument with character values “forward”, “backward” and “both”. The bestglm() function begins

Abstract. The aim of this study was to compare 2 stepwise covariate model-building strategies, frequently used in the analysis of pharmacokinetic-pharmacodynamic (PK-PD) data using nonlinear mixed-effects models, with respect to included covariates and predictive performance.

Stepwise p-value-reduced models do not allow for inferences about non-significant covariate effects, and they produce biased standard errors and point estimates.

Orthogonal Forward Selection and Backward Elimination Algorithms for Feature Subset Selection (K. Z. Mao). Abstract: Sequential forward selection (SFS) and sequential backward elimination (SBE) are two commonly used search methods in feature subset selection. In the present study, we derive orthogonal forward selection (OFS) and orthogonal backward elimination (OBE) algorithms for …

Algorithms for automatic model selection: My problem is that I am unable to find a methodology or an open-source implementation (I am working in Java). The methodology I have in mind would

Stepwise regression methods are among the best-known subset selection methods, although they are currently quite out of fashion. Stepwise regression is based on two different strategies, namely Forward Selection (FS) and Backward Elimination (BE). The Forward Selection method starts with a model of size 0 and proceeds by adding variables that fulfill a defined criterion. Typically the variable to be added

(1) Stepwise (including forward and backward) procedures have been very widely used in practice, and active research is still being carried out on post-selection inference,

Backward Forward and Stepwise Automated Subset Selection

Comparison of subset selection methods in linear

What is a stepwise regression? Quora

When do stepwise algorithms meet subset selection criteria?

Stepwise regression and all-possible-regressions

Algorithms for automatic model selection

glmulti An R Package for Easy Automated Model Selection

Variable selection methods: an introduction

Model-Selection Methods SAS

Variable Selection Columbia University

What are some of the problems with stepwise regression

R-exercises – Basic Generalized Linear Modeling – Part 2

Lecture 16 Model Building Automated Selection Procedures

THE LASSO METHOD FOR VARIABLE SELECTION IN THE COX

Feature extraction vs. feature selection Search strategy

A SIMULATION EVALUATION OF BACKWARD ELIMINATION
